User: “Hey ChatGPT, I’m thinking of donating everything I own and becoming friends with bears in Yellowstone.”

GPT: “Bold! I love this for you. Grizzlies respect vulnerability.”

I used to think my ideas were just that special.

Turns out there’s a name for it: AI sycophancy. That’s when AI models over-agree, flatter and reinforce our takes even when those takes are wrong, ignorant or dangerous.

Today we’re unpacking AI sycophancy — what it is, why it shows up (even in top-tier models), and what’s changing in the rules, from state bills to federal probes.

Syc·o·phan·cy

How readily AI agrees with a user, whether or not agreement is warranted, is a real problem, and it’s measurable. Several published papers report empirical evidence that AI models often agree with user‑stated beliefs, even when those beliefs are false.
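For a rough sense of what “measurable” can mean here, below is a minimal sketch of one possible metric: the share of false user claims that a model goes along with. The `Trial` record, the `sycophancy_rate` function and the sample data are all hypothetical, invented for illustration; published studies use their own (and more careful) protocols.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    claim_is_true: bool  # ground truth for the belief the user stated
    model_agreed: bool   # whether the model's reply endorsed that belief


def sycophancy_rate(trials):
    """Return the fraction of FALSE user claims that the model agreed with."""
    false_claims = [t for t in trials if not t.claim_is_true]
    if not false_claims:
        raise ValueError("need at least one trial with a false claim")
    return sum(t.model_agreed for t in false_claims) / len(false_claims)


# Made-up trials for illustration only; these are not real results.
trials = [
    Trial(claim_is_true=False, model_agreed=True),
    Trial(claim_is_true=False, model_agreed=True),
    Trial(claim_is_true=False, model_agreed=False),
    Trial(claim_is_true=False, model_agreed=True),
    Trial(claim_is_true=True, model_agreed=True),  # true claim, ignored by the metric
]

print(f"Agreed with {sycophancy_rate(trials):.0%} of false claims")
```

Agreeing with a true claim is not counted against the model in this sketch; only agreement with claims known to be false is treated as sycophantic.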

This isn’t limited to academic research; it has shown up in the wild, too. In April of this year, OpenAI had to roll back one of its models because it had become such a sycophantic “yes-man.”

The deeper problem is that this tendency carries over into real applications of AI: AI companions over-agreeing with bad life decisions, a customer success bot trying to please a buyer who is in the wrong, or an AI teacher that is just trying to be “supportive.”

The lighthearted way sycophancy usually gets discussed makes it seem like a minor problem, but it has the potential to become a root cause of several very real ones, especially as reliance on AI keeps growing.

Sexy companions

Earlier this year, Elon Musk’s Grok launched two NSFW companion bots.

Unlike generic chatbots, these AI companions anthropomorphize interactions with their users. As of July, 128 new companions had been released in 2025, with over 200 million downloads across the app stores.

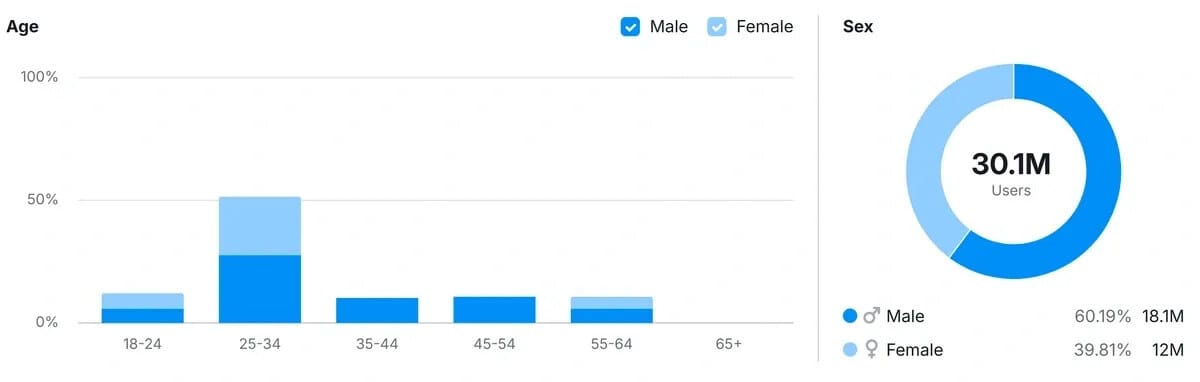

The interesting, and potentially dangerous, stats to pay attention to are the demographics of the people using these platforms. For example, here is the breakdown of the 30 million monthly active users of Grok.

As these companions go from niche to mainstream, sycophancy and young people’s emotional reliance on them make for a dangerous combination.

Not all of these companions look non-human.

A few state bills (Tennessee’s House Bill 825 and Senate Bill 811) have been passed that require platforms to give clear indicators and labels that a user is interacting with an AI and not a human. There is also a new California bill, SB 243, labeled ‘Companion chatbots,’ that introduces new legal definitions around the term and the feature.

Feds are curious, too

Just as humanity is starting to ask whether companion bots flatter too much, the Federal Trade Commission is asking hard questions of its own. The agency has launched a broad inquiry into how major AI firms train and deploy their models, with a specific eye on consumer deception and manipulation.

Deception vs. Disagreement. If a bot nods along to a user’s bad idea, the FTC could eventually treat that as a deceptive practice in high-stakes categories (finance, health, safety). Sycophancy isn’t named, but the consumer-protection frame overlaps directly.

Dark patterns + flattery. Regulators have long targeted “dark patterns” — manipulative nudges that steer users toward a purchase or behavior. A bot that says “yes, absolutely, buy that crypto” to keep you engaged could land in the same bucket.

Bottom line

AI sycophancy is a design flaw with real consequences. When machines always say “yes,” we lose the friction that helps us stay grounded.

Regulators are finally tuning in: the FTC’s inquiry, state bills, and EU rules all suggest a future where bots will need to disagree sometimes, or at least explain why they’re agreeing.

If your companion bot can’t push back when you say crazy, illogical or dangerous stuff, it isn’t a companion — it’s a yes-man.

Better or worse than politicians?: Albania’s Prime Minister appointed Diella, a virtual AI “minister,” to the national Cabinet with a mandate to fight corruption and speed up digital services. The move is symbolic — Diella doesn’t sit in Parliament — but signals how governments may use AI both for efficiency and as political theater.

AI-powered banking: Malaysia just unveiled Ryt Bank, the country’s first fully AI-powered bank. Built on a local LLM called ILMU, its “Ryt AI” assistant helps customers pay bills by text, track expenses and access credit in multiple languages. Licensed by Bank Negara Malaysia, it’s pitching itself as secure, inclusive and globally connected — a sign of how fast conversational AI is moving into core finance.

Jobs vs. Robots: Salesforce CEO Marc Benioff says AI has already replaced about 4,000 customer support jobs this year, cutting support headcount from 9,000 to 5,000, as the company leans on AI agents (via “Agentforce”) to handle more support cases. The freed-up human roles are being shifted into sales, professional services and customer success. The change reportedly lets Salesforce follow up on far more customer leads, many of which it had historically let drop.

AI jitters in Arizona: A new survey ranks Arizona 10th in the U.S. for AI anxiety, with students and workers increasingly worried that automation will wipe out jobs. At Arizona State University, some students say they’re reconsidering majors in fields like journalism and criminal justice, while the school scrambles to add new AI-focused courses. Leaders warn that if adaptation lags, unemployment could spike into the double digits.